Understanding RAG

In previous modules, you built a strong foundation in AI engineering: learning how LLMs work, setting up Gemini, making API calls, and mastering prompt engineering. You also explored structured interactions using function calling and JSON mode to build smarter assistants.

Now, in Understanding RAG, we take the next big step: giving LLMs access to external knowledge. You’ll learn what Retrieval-Augmented Generation (RAG) is, why it matters, and how it changes the way we build AI systems, making them more powerful and context-aware.

Introduction to RAG

RAG stands for Retrieval-Augmented Generation. It is an approach that pairs a retrieval component (which finds useful information) with a generation component (a language model that writes the reply) so the system can give more accurate and relevant answers.

The motivation: large language models (LLMs) are powerful, but they only “know” what their parameters captured from the training data. That data may not include domain-specific, up-to-date, or proprietary information. RAG lets the LLM consult an external knowledge source dynamically, at query time.

In effect, RAG gives the model “open-book” access: instead of relying purely on memory (the model’s weights), the system retrieves relevant facts, then generates a response using both the prompt and the retrieved content.

Core Components of a RAG System

1. Knowledge Base

What It Is: A repository containing structured or unstructured information that the system can access.

Examples:

- Document collections (PDFs, articles, manuals)

- Databases with structured information

- APIs providing real-time data

- Web content or internal company knowledge

Purpose: Serves as the external source from which the system retrieves information to answer user queries.

2. Retrieval System

What It Is: The mechanism that searches the knowledge base to find relevant information.

Key Functions:

- Converting user queries into searchable formats

- Matching queries against available information

- Ranking results by relevance

- Returning the most pertinent content

Purpose: Ensures that the system retrieves the most relevant information to answer the user's query effectively.

3. Context Integration

What It Is: The process of combining the retrieved information with the user's original query.

Key Functions:

- Formatting retrieved content for the language model

- Ensuring context fits within token limits

- Maintaining query intent while adding supporting information

Purpose: Provides the language model with comprehensive context to generate accurate and relevant responses.
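
The token-limit function listed above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not a production approach: it assumes the chunks arrive already ranked by relevance, and it approximates token count by counting whitespace-separated words, whereas a real system would use the tokenizer of its chosen model. The names `estimate_tokens`, `fit_to_budget`, and `max_tokens` are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def fit_to_budget(ranked_chunks: list[str], max_tokens: int) -> list[str]:
    """Keep the highest-ranked chunks whose combined size fits the budget."""
    selected = []
    used = 0
    for chunk in ranked_chunks:
        cost = estimate_tokens(chunk)
        if used + cost > max_tokens:
            break  # stop at the first chunk that would overflow the budget
        selected.append(chunk)
        used += cost
    return selected


# Example chunks, assumed to be sorted from most to least relevant.
chunks = [
    "Open Settings and choose Reset Password.",
    "A reset link is sent to your registered email.",
    "The mobile app was redesigned last quarter.",
]
context = fit_to_budget(chunks, max_tokens=16)
print(context)
```

With a budget of 16 "tokens", the first two chunks fit and the third is dropped. Stopping at the first overflow keeps the ranking order intact; an alternative design would skip the oversized chunk and keep trying smaller ones.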

4. Generation Engine

What It Is: The large language model (LLM) that processes both the original query and the retrieved context to produce the final response.

Key Functions:

- Synthesizing information from multiple sources

- Maintaining conversational flow

- Applying reasoning to generate insights

- Keeping the response relevant to the query and grounded in the retrieved context

Purpose: Generates a coherent and contextually appropriate response to the user's query.

Real-World Use Cases of RAG

1. Customer Support Systems

- Example: A user asks, "How do I reset my password for the new mobile app?"

- RAG in Action: The system retrieves the latest password reset procedures from updated documentation.

- Benefit: Users receive current instructions, even after app updates, ensuring accurate and timely assistance.

2. Research and Analysis

- Example: A researcher inquires, "What are the latest trends in renewable energy investment?"

- RAG in Action: The system retrieves recent industry reports, news articles, and market data.

- Benefit: Provides comprehensive, up-to-date information synthesis, aiding informed decision-making.

3. Internal Knowledge Management

- Example: An employee asks, "What's our company policy on remote work equipment?"

- RAG in Action: The system retrieves current HR policies and recent policy updates.

- Benefit: Employees access accurate, up-to-date policy information, enhancing internal communication.

4. Technical Documentation

- Example: A developer queries, "How do I implement OAuth 2 in our API framework?"

- RAG in Action: The system retrieves framework-specific documentation and recent examples.

- Benefit: Provides precise, framework-relevant implementation guidance, streamlining development processes.

5. Content Creation

- Example: A content creator requests, "Write a blog post about cybersecurity best practices for small businesses."

- RAG in Action: The system retrieves the latest cybersecurity threats, current best practices, and recent case studies.

- Benefit: Delivers accurate, timely content backed by current research, enhancing content quality.

Key Benefits of RAG

1. Accuracy & Reliability

- RAG systems ground their responses in the sources you supply, so answers can be as accurate and current as the knowledge base itself.

- This reduces fabricated or outdated answers, which is especially important in fields like healthcare or finance.

2. Timeliness

- RAG can access real-time data without needing to retrain the model.

- This allows AI systems to provide the latest information, such as current events or recent research findings.

3. Cost-Effectiveness

- Instead of retraining large models, RAG uses existing models with external data sources.

- This approach saves on computational resources and time, making it more affordable for businesses.

4. Transparency

- RAG systems can cite their sources, showing where information comes from.

- This builds trust with users, as they can verify the information themselves.

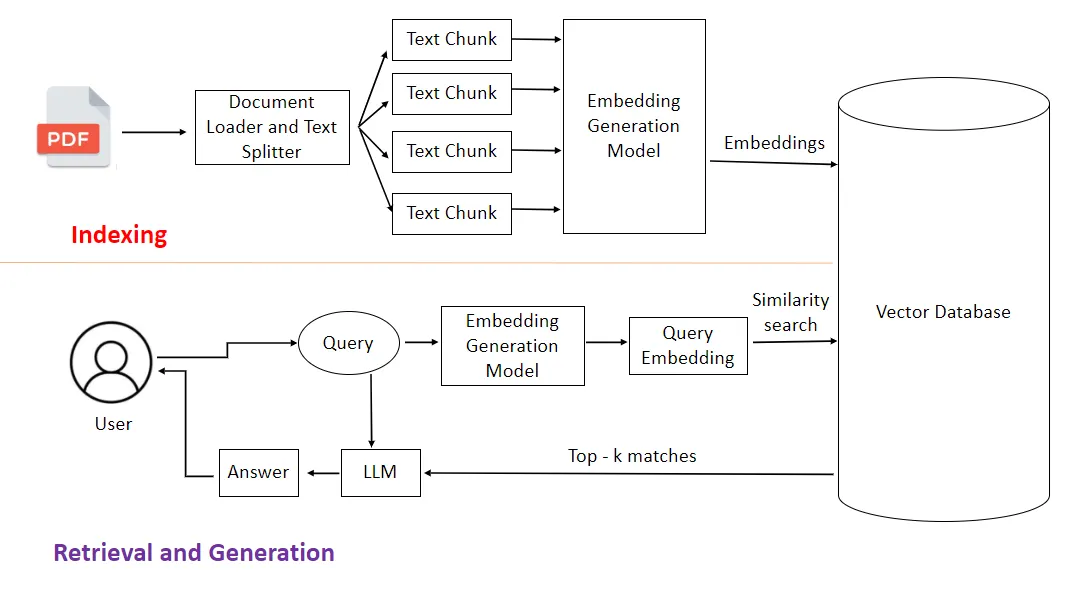

RAG System Workflow

INDEXING (prepare the knowledge)

Source file (e.g., PDF)

- This is any document (typically unstructured data) you want the system to know about: PDFs, web pages, manuals, spreadsheets, etc.

- Think: a book you want your assistant to read.

Document Loader & Text Splitter

- What it does: Loads the document and breaks it into smaller pieces called chunks (paragraphs or sections).

- Why: LLMs and vector search work better with short chunks than with huge documents.

- Tip: split by paragraphs or every ~200–500 words, and keep sentences intact.
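
The splitting step can be sketched as below. This is a minimal sketch, assuming plain text has already been loaded from the document: it uses a naive regex to find sentence boundaries and packs whole sentences into chunks up to a word limit. The function name `split_into_chunks` and the `max_words` parameter are hypothetical, and real splitters handle abbreviations, headings, and overlap between chunks more carefully.

```python
import re


def split_into_chunks(text: str, max_words: int = 300) -> list[str]:
    """Pack whole sentences into chunks of at most `max_words` words each.

    A single sentence longer than `max_words` becomes its own oversized chunk
    rather than being cut mid-sentence.
    """
    # Naive sentence splitter: break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunks, current, count = [], [], 0
    for sentence in sentences:
        n = len(sentence.split())
        if current and count + n > max_words:
            # Adding this sentence would exceed the limit: close the chunk.
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n
    if current:
        chunks.append(" ".join(current))
    return chunks


doc = "RAG retrieves facts. It then generates an answer. Chunks keep ideas small."
for chunk in split_into_chunks(doc, max_words=8):
    print(repr(chunk))
```

With `max_words=8`, the first two sentences (eight words together) share a chunk and the third starts a new one.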

Text Chunks

- These are the small pieces of text produced by the splitter. Each chunk will be treated as an independent unit of knowledge.

- Analogy: index cards with one idea per card.

Embedding Generation Model

- What it does: Takes each text chunk and converts it into a vector (a list of numbers) that captures the chunk’s meaning.

- Why: Vectors let us compare meaning: “Which chunks are most similar to this question?”

- Tip: use ready-made embedding models (OpenAI, sentence-transformers all-MiniLM, etc.).

Embeddings → Vector Database

- What it does: Stores each chunk’s vector (and a reference to the original text) in a vector database (FAISS, Pinecone, Chroma, Milvus, etc.).

- Why: The vector DB lets us quickly find chunks similar to a query vector.

- Tip: store metadata (source, page number, date) with each chunk so you can show citations later.
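
The indexing steps above (embed each chunk, then store the vector with its text and metadata) can be sketched with a toy in-memory index. Everything here is an assumption for illustration: `toy_embed` is a hashed bag-of-words stand-in, not a real embedding model, so it captures only word overlap rather than meaning. `InMemoryIndex` is a hypothetical placeholder for a vector database, and the file names are made up.

```python
import hashlib
import math
from dataclasses import dataclass, field


def toy_embed(text: str, dims: int = 64) -> list[float]:
    """Stand-in embedding: hashed bag-of-words, L2-normalized.

    NOT a real embedding model; it only reflects which words appear.
    """
    vec = [0.0] * dims
    for word in text.lower().split():
        word = word.strip(".,!?;:\"'()")
        if not word:
            continue
        # Deterministic hash so the same word always lands in the same bucket.
        digest = hashlib.sha256(word.encode("utf-8")).hexdigest()
        vec[int(digest, 16) % dims] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


@dataclass
class Record:
    text: str                      # original chunk text
    vector: list[float]            # embedding of the chunk
    metadata: dict = field(default_factory=dict)  # e.g. source, page, date


class InMemoryIndex:
    """Hypothetical minimal store; a real system would use a vector database."""

    def __init__(self) -> None:
        self.records: list[Record] = []

    def add(self, text: str, **metadata) -> None:
        self.records.append(Record(text, toy_embed(text), metadata))


index = InMemoryIndex()
index.add("Open Settings and choose Reset Password.", source="app_guide.pdf", page=12)
index.add("Refunds are processed within five business days.", source="billing.pdf", page=3)
print(len(index.records), index.records[0].metadata)
```

Keeping the text and metadata next to each vector is what later makes it possible to show the user where an answer came from.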

RETRIEVAL & GENERATION (answering a user)

User → Query

- The user types a question, e.g.: “How do I reset my password in the new app?”

- This is the input we need to answer.

Embedding Generation Model (for Query)

- What it does: Converts the user’s question into a vector using the same embedding model used in indexing.

- Why: Query vectors and chunk vectors must be in the same “space” so we can compare them.

Query Embedding → Similarity Search

- What it does: The vector DB finds the stored chunk vectors most similar to the query vector.

- Result: a list of top-k matches (the most relevant chunks).
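
One common way to measure "most similar" is cosine similarity, sketched below as a brute-force scan. The three-dimensional vectors are made up for illustration (real embeddings usually have hundreds of dimensions), the helper names are hypothetical, and vector databases typically rely on specialized indexes rather than comparing the query against every stored vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, chunk_vecs, k=2):
    """Return (index, score) pairs for the k most similar chunk vectors."""
    scored = [(i, cosine_similarity(query_vec, v)) for i, v in enumerate(chunk_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Made-up vectors standing in for stored chunk embeddings.
chunk_vectors = [
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.0, 0.0, 1.0],
]
query_vector = [1.0, 0.0, 0.0]
matches = top_k(query_vector, chunk_vectors, k=2)
print(matches)
```

Here the first two chunk vectors point in nearly the same direction as the query, so they are returned ahead of the third, which is orthogonal to it.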

Top-k matches → LLM (Augmentation)

- What happens next: You take those top chunks (their text) and combine them with the user’s question into a prompt for the LLM.

- Example prompt pattern:

Use the following excerpts to answer the question. If the answer is not in the excerpts, say you don’t know.

[chunk 1]

[chunk 2]

[chunk 3]

Question: <user question>

Answer:

- Why: This is the “augmentation” step, giving the LLM the retrieved facts to ground its answer.
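
The prompt pattern above can be assembled with a small helper. This is a sketch under assumptions: the function name `build_prompt` and the numbered `[1]`, `[2]` excerpt labels are illustrative choices, and the resulting string would then be sent to whichever LLM API you use.

```python
def build_prompt(question: str, chunks: list[str]) -> str:
    """Combine retrieved chunks and the user's question into one prompt."""
    # Number each excerpt so the model (and the reader) can refer back to it.
    excerpts = "\n\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1)
    )
    return (
        "Use the following excerpts to answer the question. "
        "If the answer is not in the excerpts, say you don't know.\n\n"
        f"{excerpts}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


prompt = build_prompt(
    "How do I reset my password in the new app?",
    [
        "Open Settings and choose Reset Password.",
        "A reset link is sent to your registered email.",
    ],
)
print(prompt)
```

Numbering the excerpts is optional, but it gives the model a simple handle for citing which excerpt supports each part of its answer.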


LLM → Answer

- The LLM generates the final answer using the question + the retrieved chunks.

- It may quote or paraphrase chunks, synthesize several sources, or say “I don’t know” if info is missing (if you prompt it to).

- Tip: explicitly instruct the LLM to use only the provided documents and cite them when possible.

Answer → User

- The system returns the LLM’s answer to the user, optionally with source citations or snippet links.
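
Attaching citations to the returned answer can be sketched as below, assuming each retrieved chunk carries `source` and `page` metadata saved at indexing time. The function name, the metadata keys, the file names, and the answer string (a placeholder for real LLM output) are all hypothetical.

```python
def format_with_sources(answer: str, retrieved: list[dict]) -> str:
    """Append a de-duplicated list of sources to the LLM's answer."""
    labels = []
    for item in retrieved:
        label = f"{item['source']}, p. {item['page']}"
        if label not in labels:  # several chunks may share one page
            labels.append(label)
    if not labels:
        return answer
    return "\n".join([answer, "", "Sources:"] + [f"- {label}" for label in labels])


# Metadata of the chunks that were passed to the LLM (made-up values).
retrieved = [
    {"source": "app_guide.pdf", "page": 12},
    {"source": "app_guide.pdf", "page": 12},
    {"source": "release_notes.pdf", "page": 2},
]
reply = format_with_sources("Open Settings and choose Reset Password.", retrieved)
print(reply)
```

Listing sources from the retrieval metadata, rather than asking the model to recall them, keeps the citations tied to the chunks that were actually supplied.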

Summary

RAG represents a fundamental shift in how we build AI applications, moving from static knowledge models to dynamic, source-aware systems. By combining retrieval and generation, RAG enables you to build applications that are accurate, current, and transparent while remaining cost-effective and maintainable.

The four core components (knowledge base, retrieval system, context integration, and generation engine) work together to create systems that can access vast amounts of information and synthesize it into helpful responses. While RAG has limitations around retrieval quality and latency, its benefits make it essential for many production AI applications.

In the next tutorial, we'll dive into text embeddings, the technology that powers modern retrieval systems and makes RAG possible.