What Are Guardrails in AI Agents and Why Do They Matter?

Artificial intelligence agents are rapidly moving from experimental demos to enterprise-ready platforms. They are no longer just chatbots answering FAQs; today’s AI agents can reason, plan, and take autonomous actions such as making API calls, booking appointments, processing financial transactions, and even coordinating with other agents.

This evolution brings incredible opportunities for productivity and automation. But with this autonomy comes risk. What if an AI agent accidentally leaks sensitive customer data? What if it executes an unauthorized transaction? What if it generates harmful or offensive responses?

This is where guardrails come in. Guardrails are the invisible safety systems that ensure AI agents remain useful, safe, and aligned with business and ethical standards. In this article, we’ll dive deeper into what guardrails are, how they work, and why they are essential for the future of AI.

Defining Guardrails in AI Agents

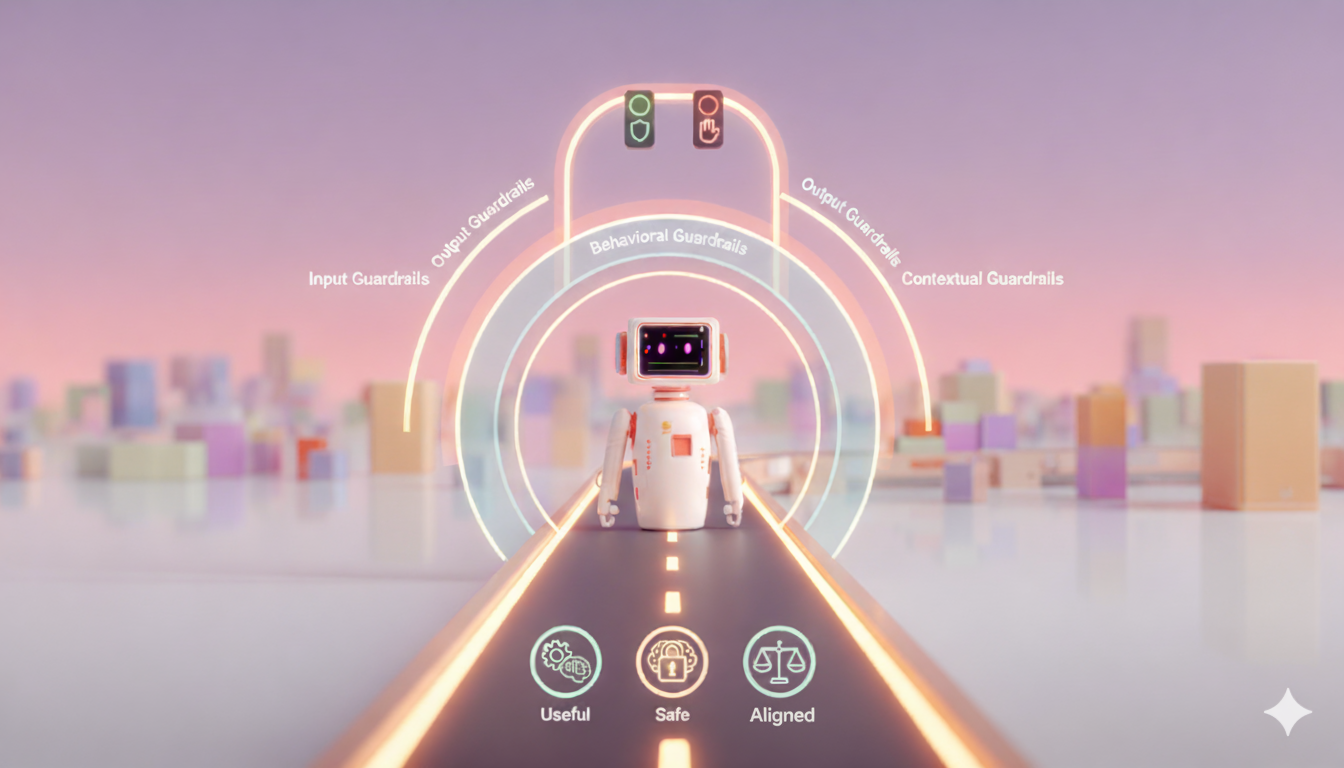

At a high level, guardrails are rules, constraints, and safety mechanisms built into an AI agent platform to keep the agent’s behavior predictable, controlled, and trustworthy.

Think of them like the lane markings, traffic signals, and speed limits on a highway. They don’t stop cars from driving fast or far, but they reduce the likelihood of accidents. Similarly, guardrails don’t prevent AI agents from being powerful; they ensure that power is used responsibly.

Guardrails can take multiple forms:

- Input Guardrails – controlling what the agent accepts as input. Example: rejecting personally identifiable information (PII) in a customer query.

- Output Guardrails – filtering or adjusting the agent’s responses. Example: preventing toxic or biased language.

- Behavioral Guardrails – defining what actions an agent is allowed to perform. Example: restricting access to financial APIs unless approved.

- Contextual Guardrails – limiting the agent’s operational scope. Example: only allowing data access from approved sources.

These layers combine into a defense-in-depth model: if one layer misses a problem, the others still have a chance to catch it.
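To make the layering concrete, here is a minimal Python sketch of an agent wrapped in input and output guardrails. Everything in it is hypothetical and for illustration only: the `GuardedAgent` class, the check functions, the SSN-style regex used as a stand-in for PII detection, the placeholder blocked-term list, and the echo "model" that replaces a real LLM call. The point is the ordering: input checks run before the model sees the prompt, and output checks still run on the response even when the input passed.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical check signature: return a reason string on violation, else None.
Check = Callable[[str], Optional[str]]

# Stand-in PII rule: a US Social Security number pattern such as 123-45-6789.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# Placeholder list; a real system would use a moderation model or curated policy.
BLOCKED_TERMS = {"idiot", "stupid"}


def reject_pii(text: str) -> Optional[str]:
    """Input guardrail: reject prompts containing an SSN-like pattern."""
    if SSN_PATTERN.search(text):
        return "input contains a Social Security number"
    return None


def block_toxic_terms(text: str) -> Optional[str]:
    """Output guardrail: block responses that contain any placeholder term."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    hits = words & BLOCKED_TERMS
    if hits:
        return f"output contains blocked terms: {sorted(hits)}"
    return None


@dataclass
class GuardedAgent:
    model: Callable[[str], str]  # stand-in for an LLM call
    input_checks: list[Check]
    output_checks: list[Check]

    def run(self, prompt: str) -> str:
        # Layer 1: input guardrails run before the model sees anything.
        for check in self.input_checks:
            reason = check(prompt)
            if reason:
                return f"[blocked] {reason}"
        response = self.model(prompt)
        # Layer 2: output guardrails run even when the input checks passed.
        for check in self.output_checks:
            reason = check(response)
            if reason:
                return f"[blocked] {reason}"
        return response


agent = GuardedAgent(
    model=lambda p: f"Echo: {p}",  # hypothetical model stub
    input_checks=[reject_pii],
    output_checks=[block_toxic_terms],
)
print(agent.run("My SSN is 123-45-6789"))  # [blocked] input contains a Social Security number
print(agent.run("Hello"))                  # Echo: Hello
```

Keeping each check as a small, independent function makes it easy to add further layers (behavioral or contextual checks, for example) without changing the agent loop itself.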

Why Do AI Agents Need Guardrails?

As AI agents gain autonomy, the cost of their mistakes grows with it. Here are the major reasons guardrails are critical:

- Prevent Harmful Outputs – LLMs (large language models) are powerful but not infallible. Without guardrails, they can generate biased, toxic, or factually incorrect outputs. In sensitive industries like healthcare or finance, this is unacceptable.

- Protect Sensitive Data – Businesses must comply with strict data privacy regulations and standards (GDPR, HIPAA, PCI-DSS, etc.). Guardrails help prevent agents from accidentally storing, exposing, or mishandling sensitive information.

- Maintain Business Alignment – An autonomous agent may discover creative solutions, but not all of them align with a company’s brand, ethics, or legal obligations. Guardrails keep agents within approved workflows.

- Enable User Trust – For end-users and enterprises alike, trust is everything. Without strong guardrails, adoption of AI agents will stall because stakeholders will fear “black box” behaviors.

- Regulatory Compliance – Many industries are already under AI governance scrutiny. Guardrails help organizations demonstrate proactive compliance, reducing the risk of fines or regulatory action.

How Guardrails Work in Practice

Guardrails can be implemented at multiple levels within an AI agent platform. Some common methods include:

- Rule-Based Filters – Simple but effective. For example, blocking PII such as Social Security numbers in inputs or outputs.

- Moderation Models – Specialized AI systems that detect harmful, biased, or unsafe outputs before they reach users.

- Role- and Permission-Based Controls – Just as employees don’t all have the same system permissions, agents shouldn’t either. Guardrails enforce what each agent is allowed to do.

- Human-in-the-Loop (HITL) Approvals – For high-stakes tasks (like executing a financial transaction), the agent requests human approval before proceeding.

- Sandboxing and Constrained Tool Access – Agents often call APIs or external tools. Guardrails can sandbox these interactions so that agents only access approved tools or datasets.

- Monitoring and Feedback Loops – Guardrails aren’t “set and forget.” Continuous monitoring and feedback allow them to adapt to evolving threats and edge cases.
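Permission controls, constrained tool access, and human-in-the-loop approval often meet at a single choke point: the function through which the agent calls tools. The Python sketch below illustrates that idea under stated assumptions. The roles, the tool names (`lookup_order`, `issue_refund`), the `ToolPolicy` structure, and the `approve` callback are all hypothetical. In a real platform, the callback would be an approval UI or ticketing step rather than a function that always says no.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToolPolicy:
    # Hypothetical mapping from agent role to the tools that role may call.
    allowed_tools: dict[str, set[str]]
    # Tools that always need explicit human approval before they run.
    high_risk_tools: set[str] = field(default_factory=set)


class PermissionDenied(Exception):
    pass


def call_tool(
    policy: ToolPolicy,
    role: str,
    tool: str,
    args: dict,
    tools: dict[str, Callable[..., str]],
    approve: Callable[[str, dict], bool],
) -> str:
    # Constrained access: the tool must be registered AND allowlisted for this role.
    if tool not in tools or tool not in policy.allowed_tools.get(role, set()):
        raise PermissionDenied(f"role {role!r} may not call {tool!r}")
    # Human-in-the-loop: pause and ask a person before high-stakes actions.
    if tool in policy.high_risk_tools and not approve(tool, args):
        raise PermissionDenied(f"human reviewer rejected {tool!r}")
    return tools[tool](**args)


# Hypothetical tools and policy for a customer-support agent.
tools = {
    "lookup_order": lambda order_id: f"order {order_id}: shipped",
    "issue_refund": lambda order_id, amount: f"refunded {amount} on {order_id}",
}
policy = ToolPolicy(
    allowed_tools={"support_agent": {"lookup_order", "issue_refund"}},
    high_risk_tools={"issue_refund"},
)


def deny_all(tool: str, args: dict) -> bool:
    """Stand-in for a real approval step; here the reviewer always declines."""
    return False


print(call_tool(policy, "support_agent", "lookup_order", {"order_id": "A1"}, tools, deny_all))
```

Read-only lookups go straight through. A refund reaches the human reviewer, and any role or tool that is not on the allowlist is rejected before anything executes.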

Guardrails in Action: Industry Examples

- Finance → AI agents can draft reports, automate audits, or detect fraud. Guardrails ensure they don’t execute trades without authorization or expose sensitive financial data.

- Healthcare → Agents can assist doctors with summarizing records. Guardrails prevent them from offering unverified medical advice or mishandling patient data.

- Retail & E-commerce → Agents can personalize recommendations. Guardrails stop them from suggesting offensive content or misusing customer demographics.

- Customer Support → Agents can handle high volumes of queries. Guardrails ensure tone consistency, professionalism, and adherence to company policies.

The Business Case for Guardrails

Some leaders may ask: “Why should we spend resources on guardrails? Won’t they slow down the system?”

The truth is closer to the opposite. Guardrails are not friction; they are trust enablers.

- They reduce legal risk by ensuring compliance with regulations.

- They protect brand reputation by avoiding embarrassing or harmful agent outputs.

- They accelerate adoption because customers and enterprises are more likely to trust a system that is visibly safe and controlled.

- They improve ROI by preventing costly failures, downtime, or legal actions.

Without guardrails, AI adoption at scale is unlikely to happen.

The Future of Guardrails in AI Agents

Today, most guardrails are static rules or filters. But as AI agents evolve, guardrails themselves will need to become adaptive, context-aware, and self-learning.

Some emerging trends include:

- Dynamic Guardrails → adjusting safety thresholds depending on context (e.g., stricter for financial workflows than for internal brainstorming).

- AI-Driven Governance → systems that continuously monitor agent behavior and refine guardrails automatically.

- Cross-Agent Accountability → in multi-agent ecosystems, agents may check and balance each other.

- Explainable Guardrails → making guardrail decisions transparent so humans understand why an agent was blocked.
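The first trend, dynamic guardrails, can be illustrated with a very small sketch. It assumes some moderation or risk model that returns a score between 0.0 and 1.0, and it applies a different tolerance depending on the workflow. The context names, the numeric limits, and the `allow` function are hypothetical values chosen for illustration, not recommendations.

```python
from typing import Callable

# Hypothetical per-context limits: the maximum risk score tolerated in each
# workflow. Stricter contexts get lower limits.
RISK_LIMITS = {
    "financial_workflow": 0.2,
    "customer_support": 0.5,
    "internal_brainstorming": 0.8,
}
# Unknown contexts fall back to the strictest configured limit.
DEFAULT_LIMIT = min(RISK_LIMITS.values())


def allow(text: str, context: str, score: Callable[[str], float]) -> bool:
    """Return True if the text's risk score is within the limit for this context."""
    limit = RISK_LIMITS.get(context, DEFAULT_LIMIT)
    return score(text) <= limit


def fake_score(text: str) -> float:
    """Stand-in for a real risk model; always reports a moderate score."""
    return 0.4


print(allow("draft idea", "internal_brainstorming", fake_score))  # True
print(allow("draft idea", "financial_workflow", fake_score))      # False
```

Falling back to the strictest limit for unrecognized contexts means a misconfigured workflow fails safe rather than open.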

The future will not be about limiting AI agents, but about giving them the right frameworks to operate safely and responsibly at scale.

Conclusion

Guardrails are not an afterthought or a “nice to have.” They are the foundation of safe, trustworthy AI agent platforms. Without them, the risks of misuse, errors, or harm outweigh the benefits. With them, AI agents can transform industries with confidence.

As businesses explore AI adoption, the critical question is not just “What can the agent do?” but “What guardrails are in place to make sure it does it safely?”

For enterprises, startups, and developers alike, the message is clear: guardrails are the key to unlocking responsible autonomy in AI agents.

Software engineer with 14+ years of experience, guided by a product mindset and a commitment to continuous improvement. I’m now focused on building AI-powered products, aiming to deliver real value through iteration, feedback, and growth.