Context Engineering Series #1: Beating Context Rot with Compaction

This is the first post in a series on context engineering. We'll start with the most practical lesson from our own journey: when and why context compaction becomes the simplest, highest-impact fix for long-running agent conversations.

Why Context Engineering Matters

Context engineering is the discipline of deciding what information goes into the LLM context window, in what format, and when. It's not just prompt writing; it's the orchestration layer that keeps agents reliable as conversations and tool usage grow.

As Andrej Karpathy put it:

Context engineering is the art of filling the context window with just the right information for the next step (source)

For AI agents, this matters because reliability degrades as the conversation length grows. The longer the agent runs, the higher the risk of:

- Instruction-following drift

- Tool calling errors

- Hallucinations in the absence of grounded data

- Unpredictable behavior caused by irrelevant or stale tokens

If you're new to the term, we highly recommend the excellent write-ups by the Anthropic, Manus & LangChain teams, plus our own primers on context engineering: building effective AI agents and building accurate, reliable AI agents. They lay a great foundation for what context engineering is and why it's becoming a core engineering competency.

Our Journey Into Context Engineering

One important lesson: you don't need context engineering from day one. For relatively simple use cases, modern LLMs can handle large context windows just fine. It's only when your system crosses a certain complexity threshold that context engineering becomes necessary.

Signs We Needed It

We started seeing a clear pattern: instruction following, tool-calling accuracy, and response quality all declined once a conversation passed a certain length. No amount of system prompt tweaking could stabilize the output. This was classic context rot.

The Use Case

We were building an AI agent for a climate journalism organization. The client had verified, cleaned climate datasets stored in an RDBMS. The agent translated natural-language questions into tool calls (via our MCP server), and the tools returned JSON.

That JSON was accurate - but very expensive in tokens.

Profiling What Was in the Context Window

The first step was to profile what the model actually saw each turn. The context window contained:

- System prompt

- Tool definitions

- User message

- LLM responses (starting from message 2)

- Tool call requests

- Tool call responses

We quickly noticed that tool responses were swallowing the majority of the budget - especially long JSON payloads from data queries.
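
A profile like this can be approximated in a few lines. The sketch below is illustrative only: it assumes chat messages are dicts with `role` and `content` keys, and it estimates tokens at roughly four characters each instead of calling a real tokenizer. The names `estimate_tokens` and `profile_context`, and the sample messages, are hypothetical and not taken from our production code.

```python
import json
from collections import Counter


def estimate_tokens(text):
    """Rough estimate: about 4 characters per token (an assumption, not a tokenizer)."""
    return max(1, len(text) // 4)


def profile_context(messages):
    """Sum estimated tokens per message role for one LLM request."""
    totals = Counter()
    for message in messages:
        totals[message["role"]] += estimate_tokens(message["content"])
    return dict(totals)


# Made-up sample request; the tool payload mimics a long JSON time series.
tool_payload = json.dumps([{"year": y, "value": None} for y in range(1990, 2024)])
messages = [
    {"role": "system", "content": "You answer climate data questions."},
    {"role": "user", "content": "How have Germany's emissions changed?"},
    {"role": "tool", "content": tool_payload},
    {"role": "assistant", "content": "Emissions have declined."},
]

profile = profile_context(messages)
# Print roles from largest to smallest share of the budget.
for role, tokens in sorted(profile.items(), key=lambda item: -item[1]):
    print(f"{role:<10} {tokens}")
```

For real measurements, swap `estimate_tokens` for your model provider's tokenizer or the usage figures returned with each API response.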

Eliminating the Waste: Why We Chose Compaction

There are many context engineering techniques (compaction, reduction, sub-agent architectures). For this post, we focus on compaction because it was the fastest, least invasive win.

The Compaction Insight

Tool responses were the culprit. JSON is token-inefficient (braces, quotes, whitespace), and our tool results kept piling up. The simplest fix was to stop carrying old tool payloads forward in the next turn and replace them with a compact placeholder.

The Solution: Replace Old Tool Results With a Placeholder

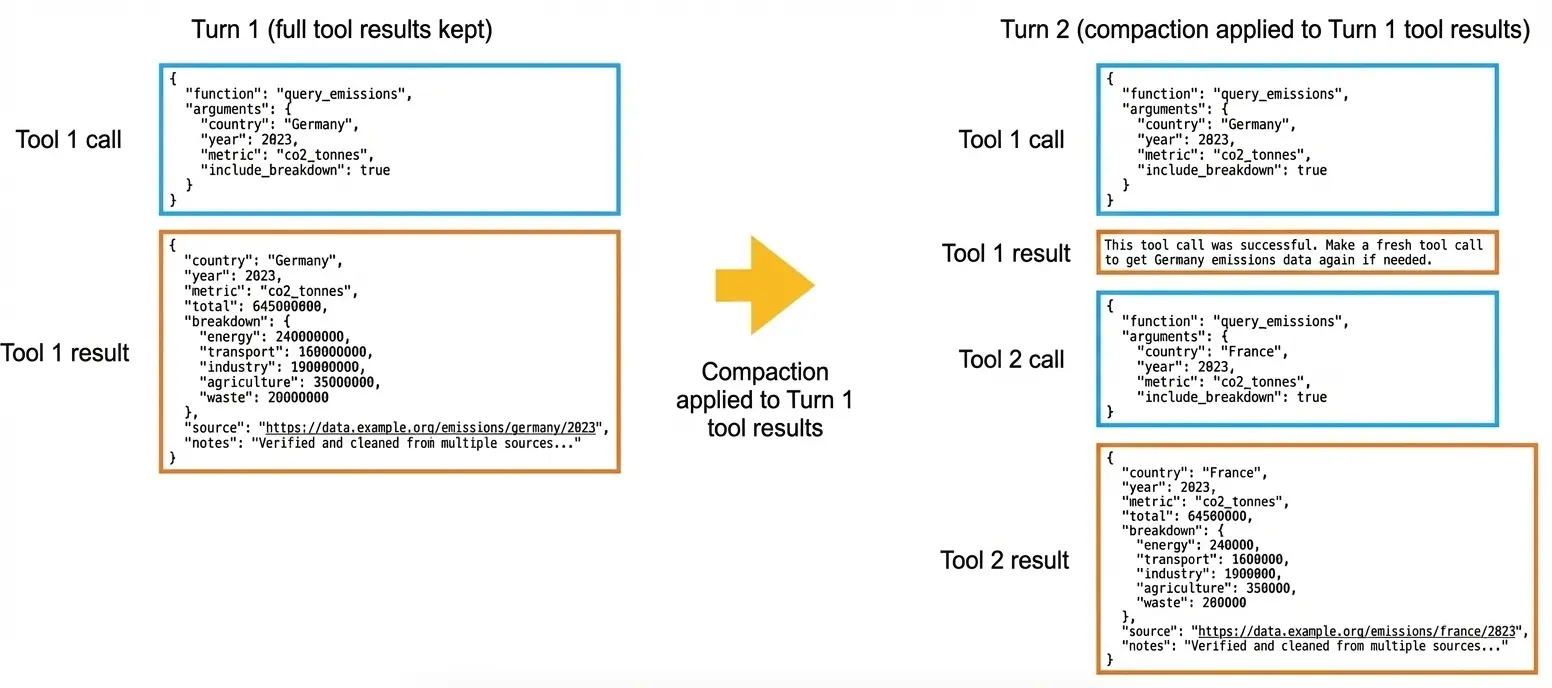

After a tool call completes, we keep the raw JSON for the UI, but we replace it in the next LLM request with a short, static placeholder. If the agent needs the data again, it can re-call the tool.

"This tool call was successful. Please re-run the tool if you need access to the data again."

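A minimal sketch of that replacement step, assuming the conversation history is a list of dicts where tool results carry `role == "tool"` (a common chat-message shape, but an assumption here). `compact_tool_results` is a hypothetical helper name. It returns a new list for the LLM request and leaves the original history untouched, so the UI can still render the raw JSON.

```python
PLACEHOLDER = (
    "This tool call was successful. "
    "Please re-run the tool if you need access to the data again."
)


def compact_tool_results(history):
    """Return a copy of history with every tool payload replaced by PLACEHOLDER.

    `history` holds messages from completed turns. The input list and its
    dicts are not modified, so the raw results stay available for the UI.
    """
    compacted = []
    for message in history:
        if message["role"] == "tool":
            # Copy the message and keep its metadata (e.g. the tool call id),
            # swapping only the heavy content.
            compacted.append({**message, "content": PLACEHOLDER})
        else:
            compacted.append(message)
    return compacted


# Hypothetical history from one completed turn.
history = [
    {"role": "user", "content": "Show Germany's CO2 emissions."},
    {"role": "assistant", "content": "Calling the emissions tool."},
    {"role": "tool", "tool_call_id": "call_1", "content": '{"rows": ["..."]}'},
    {"role": "assistant", "content": "Here is the trend for Germany."},
]

next_request_messages = compact_tool_results(history)
```
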
Example Interaction (Compaction From Turn 2 Onward)

Below is a simplified, screenshot-style view showing compaction from Turn 2 onward in a conversation where an agent is querying national emissions data for different countries.

The Impact (Token Budget)

Here's a simple before/after view of the token budget for Turn 1:

| Component | Before (tokens) | After (tokens) |

|---|---|---|

| System prompt | 5,000 | 5,000 |

| Tool definitions | 2,500 | 2,500 |

| Tool request | 50 | 50 |

| Tool response | 5,000 | 100 |

| LLM response | 200 | 200 |

| Total | 12,750 | 7,850 |

This change saves ~4,900 tokens in Turn 1 (about a 38% reduction). The tool response itself shrinks by ~98% (5,000 -> 100), freeing up room for new user turns while reducing context rot. If you want to go deeper on cost impact, see how smart context engineering cuts AI costs.

For longer conversations with many tool calls, the impact compounds. With 10 tool calls, the tool responses alone drop from ~50,000 tokens (10 x 5,000) to ~1,000 tokens (10 x 100), saving ~49,000 tokens.

| Scenario | Tool response tokens (before) | Tool response tokens (after) | Savings |

|---|---|---|---|

| 10 tool calls | 50,000 | 1,000 | 49,000 |
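
The arithmetic behind both tables is easy to reproduce. The snippet below simply recomputes the figures from the illustrative budget above; the variable names are ours for this example.

```python
# Illustrative per-component token budget from the table above.
before = {
    "system_prompt": 5000,
    "tool_definitions": 2500,
    "tool_request": 50,
    "tool_response": 5000,
    "llm_response": 200,
}
# After compaction, only the tool response changes.
after = {**before, "tool_response": 100}

total_before = sum(before.values())                         # 12750
total_after = sum(after.values())                           # 7850
saved = total_before - total_after                          # 4900
reduction_pct = round(100 * saved / total_before, 1)        # 38.4

# Savings across a conversation with 10 tool calls.
tool_calls = 10
saved_per_call = before["tool_response"] - after["tool_response"]  # 4900
saved_over_calls = tool_calls * saved_per_call              # 49000

print(total_before, total_after, saved, reduction_pct, saved_over_calls)
```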

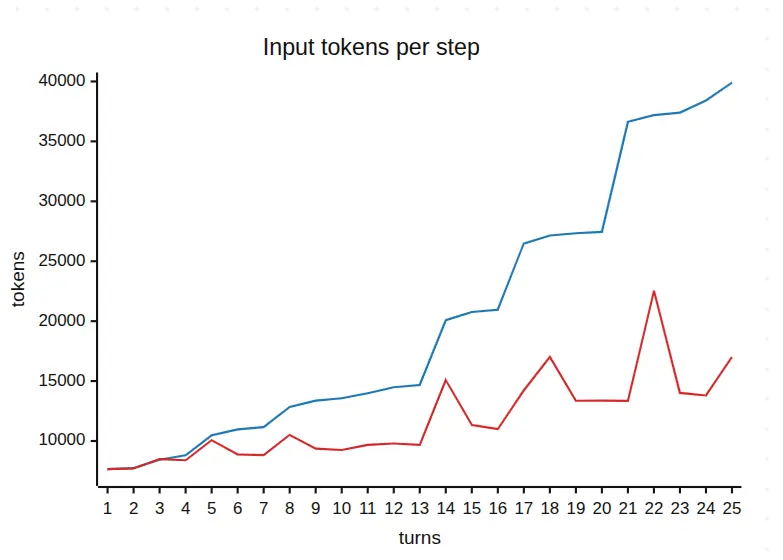

Real Conversation Results (Per-Step Input Tokens)

We also measured a real multi-turn agent loop in which the agent performed multiple internal steps in response to a single user message. The two runs had different lengths, so we limited the comparison to the first 25 steps of each run.

Quick summary

| Metric | Before | After | Change |

|---|---|---|---|

| Total input tokens | 507,889 | 316,778 | -191,111 (~37.6%) |

| Avg tokens per step | 20,315.6 | 12,671.1 | -7,644.4 |

| Median tokens per step | 14,677 | 11,326 | -3,351 |
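
If you log input tokens per step, the same summary takes only a few lines to produce. The sketch below assumes you have one integer per step; `summarize` is a hypothetical helper. Since the per-step values are not reproduced in this post, the second half only re-derives the averages and percentage change from the published totals.

```python
from statistics import mean, median


def summarize(step_tokens):
    """Total, mean, and median input tokens for a list of per-step counts."""
    return {
        "total": sum(step_tokens),
        "mean": round(mean(step_tokens), 1),
        "median": median(step_tokens),
    }


# Re-deriving the table's averages from the published totals (25 steps each).
STEPS = 25
TOTAL_BEFORE = 507_889
TOTAL_AFTER = 316_778

avg_before = round(TOTAL_BEFORE / STEPS, 1)                  # 20315.6
avg_after = round(TOTAL_AFTER / STEPS, 1)                    # 12671.1
avg_saved = round((TOTAL_BEFORE - TOTAL_AFTER) / STEPS, 1)   # 7644.4
reduction_pct = round(100 * (TOTAL_BEFORE - TOTAL_AFTER) / TOTAL_BEFORE, 1)  # 37.6

print(avg_before, avg_after, avg_saved, reduction_pct)
```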

Step-by-step trend

Two patterns stand out. In the baseline run, with no context engineering applied, input tokens climb almost linearly with each step because every tool payload stays in the request. In the optimized run, the token curve stays lower overall and shows visible dips at compaction points, where old tool payloads were replaced with compact placeholders.

Dealing With the Downsides of Compaction

Compaction introduces a key risk: loss of reasoning context. Here's how we solved it.

Preserving Insights for Reasoning

If we remove raw tool results, how does the agent remember what mattered? We adopted a lightweight notekeeping pattern:

- Immediately after a tool call returns, the agent summarizes what matters.

- It stores that summary in a structured <notes></notes> block.

- On future turns, the agent reads the notes instead of the raw data.

For time-series emissions data, we ask the agent to capture:

- 5-7 representative data points

- Data source

- Last updated date

- Short trend summary

- Any other context that will matter later

Example:

<notes>

**Germany CO2 emissions (time series)**

Data source: https://data.example.org/emissions/germany

Last updated: 2024-11-12

Key data points:

- 2018: 810 Mt

- 2019: 780 Mt

- 2020: 700 Mt

- 2021: 720 Mt

- 2022: 690 Mt

- 2023: 645 Mt

Trend: Steady decline from 2018-2023 with a dip in 2020 and partial rebound in 2021. The energy sector drives most of the decline.

</notes>
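
Because the notes replace the raw payload, it helps to confirm the agent actually wrote them before the tool result is compacted away. The sketch below is one way to do that, assuming the notes appear inline in the assistant's reply text. `extract_notes` and `strip_notes` are hypothetical helpers, and hiding the notes from the user-facing text is a design choice of this example, not something the pattern requires.

```python
import re

# Non-greedy match so multiple <notes> blocks in one reply are captured separately.
NOTES_PATTERN = re.compile(r"<notes>(.*?)</notes>", re.DOTALL)


def extract_notes(reply):
    """Return the trimmed contents of every <notes> block in an assistant reply."""
    return [block.strip() for block in NOTES_PATTERN.findall(reply)]


def strip_notes(reply):
    """Return the reply with <notes> blocks removed, e.g. for display in a UI."""
    return NOTES_PATTERN.sub("", reply).strip()


reply = """Germany's emissions have fallen since 2018.

<notes>
**Germany CO2 emissions (time series)**
Data source: https://data.example.org/emissions/germany
Last updated: 2024-11-12
Trend: Steady decline from 2018-2023 with a dip in 2020 and partial rebound in 2021.
</notes>"""

notes = extract_notes(reply)
if not notes:
    # No notes were written: safer to keep the raw tool result for now.
    print("Agent skipped notes; do not compact this tool result yet.")
else:
    print(strip_notes(reply))
```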

Key Takeaways

- Compaction is a low-effort, high-impact fix once tool results dominate your context window.

- Keep raw results for the UI but strip them from the LLM request to prevent context rot.

- Preserve reasoning-critical facts with short, structured notes.

- Measure token impact to communicate ROI and guide iteration.

In the next post, we'll explore more advanced techniques beyond compaction - including reduction strategies and multi-agent context pipelines.

Ready to build enterprise-grade AI agents with strong context engineering?

Start building smarter agents with AvestaLabs:

- Expert consultation on context engineering best practices

- Complete RAG implementation with IngestIQ

- Agent monitoring and optimization with MetricSense

- Building AI Native products safely and reliably

Get started today: hello@avestalabs.ai
