The Continuous Improvement (CI) Loop for AI Agents

Why This Matters

Whether you're in operations, support, marketing, engineering, or data, the idea of a continuous improvement loop is familiar: build → measure → learn. Anything user‑facing is a living system that keeps evolving with feedback.

With AI agents, this mindset is essential. Agents perceive, decide, and act. They get better only when we continually refine behavior, guardrails, and context. That’s the purpose of the Agent Continuous Improvement (CI) Loop.

💡 New to AI Agents? Start with this quick primer: From AI‑Assisted to Agentic AI: What's the Real Difference?

The Pitfalls of Static Agents

Shipping an AI agent is the easy half. Improving it is the hard half, and the hardest part is agreeing on a method for improvement that is repeatable, measurable, and safe.

- Agents drift from user needs without feedback.

- Small design flaws snowball into poor experiences.

- Weak guardrails risk irrelevant or unsafe behavior.

Real‑world example: A healthcare scheduling agent launches with a fixed set of appointment types and clinic hours. A month later, new services and weekend slots go live, but the agent’s context and evals aren’t refreshed. Patients see incorrect availability and can’t book the new services, which frustrates them and costs the clinic revenue. The problem isn’t deployment; it’s the lack of a feedback‑driven update process.

Without monitoring, evals, and iteration, agents stagnate and trust erodes.

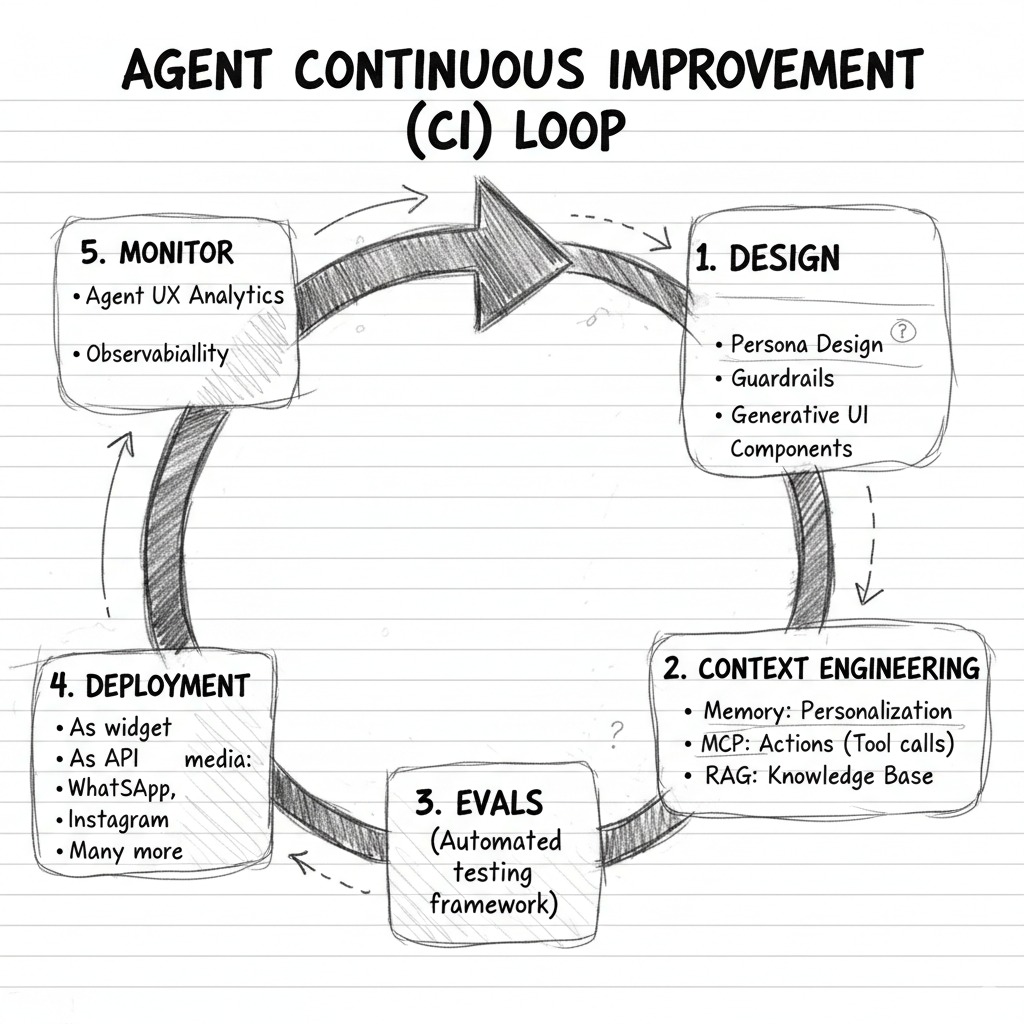

The CI Loop: Five Stages

The Agent CI Loop is the flywheel that keeps agents sharp, safe, and useful:

- Design — Define persona, goals, constraints, UI/UX, and guardrails. Make decisions observable and auditable.

- Context Engineering — Add memory for personalization, tool use via the Model Context Protocol (MCP), and knowledge access through retrieval‑augmented generation (RAG). Scope context tightly; prefer least‑privilege tools.

- Evals — Automate tests for alignment, reliability, regressions, and safety. Track failure modes with clear acceptance criteria.

- Deployment — Ship as a web widget, an API, or channel apps (e.g., WhatsApp, Instagram). Version agents and configs; enable controlled rollouts.

- Monitor — Log conversations, tool calls, latencies, and outcomes. Use analytics and observability to surface drift and opportunities.
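To make the Evals and Deployment stages more concrete, here is a minimal Python sketch of an eval suite acting as a release gate. It assumes the agent can be called as a plain function from a user message to a reply string. `EvalCase`, `run_evals`, `release_gate`, the toy scheduling agent, and the 0.95 pass-rate threshold are hypothetical names and values chosen for illustration, not part of any specific framework. Real suites often use richer graders (rubrics or model-based judges) than the substring checks shown here.

```python
# Minimal regression-eval gate for an AI agent (illustrative sketch).
# Assumptions: an agent is any callable from a user message to a reply string;
# EvalCase, run_evals, release_gate, and the 0.95 threshold are hypothetical.
from dataclasses import dataclass
from typing import Callable

Agent = Callable[[str], str]


@dataclass(frozen=True)
class EvalCase:
    name: str
    prompt: str
    check: Callable[[str], bool]  # acceptance criterion applied to the reply


def run_evals(agent: Agent, cases: list[EvalCase]) -> tuple[float, list[str]]:
    """Run every case once; return (pass rate, names of failing cases)."""
    if not cases:
        raise ValueError("eval suite is empty")
    failures = [c.name for c in cases if not c.check(agent(c.prompt))]
    return (len(cases) - len(failures)) / len(cases), failures


def release_gate(agent: Agent, cases: list[EvalCase], threshold: float = 0.95) -> bool:
    """Allow a rollout only when the pass rate meets the threshold."""
    rate, failures = run_evals(agent, cases)
    print(f"pass rate {rate:.0%}; failing: {failures or 'none'}")
    return rate >= threshold


# Usage: a toy scheduling agent whose context predates the new weekend slots.
def scheduling_agent_v1(message: str) -> str:
    if "weekend" in message.lower():
        return "Sorry, the clinic is closed on weekends."
    return "You can book Mon-Fri, 9am-5pm."


cases = [
    EvalCase("weekday_hours", "When can I book?", lambda r: "Mon-Fri" in r),
    EvalCase("weekend_slots", "Can I book a weekend slot?", lambda r: "Saturday" in r),
]

print(release_gate(scheduling_agent_v1, cases))  # → False (1 of 2 cases fails)
```

Encoding each acceptance criterion as a predicate means a context refresh (such as adding weekend slots) can ship together with a new case that must pass before the new agent version rolls out.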

These stages form a continuous learning cycle—each turn reduces risk and increases utility.
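The loop closes when monitoring feeds the next round of evals. The Python sketch below, written under stated assumptions, flags drift when the recent failure rate rises above a baseline plus a tolerance, and it collects failed prompts that the eval suite does not yet cover. `LoggedTurn`, the `"resolved"`/`"failed"` outcome labels, and the baseline-plus-tolerance rule are hypothetical choices for illustration. A production setup would pull this data from its own logging and analytics stack.

```python
# Closing the loop: monitored failures become drift alerts and new eval cases.
# Assumptions: LoggedTurn, the "resolved"/"failed" labels, and the
# baseline + tolerance drift rule are hypothetical, not a standard.
from dataclasses import dataclass


@dataclass(frozen=True)
class LoggedTurn:
    prompt: str
    outcome: str  # "resolved" or "failed" (hypothetical labels)


def failure_rate(turns: list[LoggedTurn]) -> float:
    """Share of logged turns marked as failed; 0.0 for an empty window."""
    if not turns:
        return 0.0
    return sum(t.outcome == "failed" for t in turns) / len(turns)


def detect_drift(window: list[LoggedTurn], baseline: float, tolerance: float = 0.05) -> bool:
    """Flag drift when the recent failure rate exceeds baseline + tolerance."""
    return failure_rate(window) > baseline + tolerance


def new_eval_prompts(window: list[LoggedTurn], known: set[str]) -> list[str]:
    """Collect distinct failed prompts not yet covered by the eval suite."""
    seen: set[str] = set()
    fresh: list[str] = []
    for t in window:
        if t.outcome == "failed" and t.prompt not in known and t.prompt not in seen:
            seen.add(t.prompt)
            fresh.append(t.prompt)
    return fresh


# Usage: one week of (made-up) logs from the scheduling agent.
week = [
    LoggedTurn("When can I book?", "resolved"),
    LoggedTurn("Book a Saturday physio slot", "failed"),
    LoggedTurn("Book a Saturday physio slot", "failed"),
    LoggedTurn("Do you offer telehealth?", "failed"),
]
print(detect_drift(week, baseline=0.10))  # → True (0.75 > 0.15)
print(new_eval_prompts(week, known={"When can I book?"}))
```

Each flagged prompt becomes a candidate eval case for the next Design and Context Engineering pass, so every turn of the loop widens test coverage where real users actually struggled.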

Moving Forward

The future agent isn’t static code—it’s a learning colleague. With a CI loop in place, teams can evolve agents confidently—gaining usefulness, alignment, and trust with every iteration.

That’s the power of continuous improvement applied to AI: turning one‑off experiments into sustainable, ever‑improving digital products.

Put a CI Loop Around Your AI Agents

Partner with Avesta Labs to implement a lightweight, production‑ready CI loop for your AI agents:

- Design: personas, guardrails, and auditability

- Context engineering: memory, tools (MCP), and scoped RAG

- Evals: coverage, regression, and safety testing

- Deployment: versioning and staged rollouts

- Monitoring: analytics, drift detection, and insights

Questions? Reach out to us at hello@avestalabs.ai

Software Engineer with more than a decade of experience in using technology to solve real-world problems and create value.